If you’re diving into the world of AI models—especially Transformers like GPT, BERT, or T5—you’ve probably heard this before:

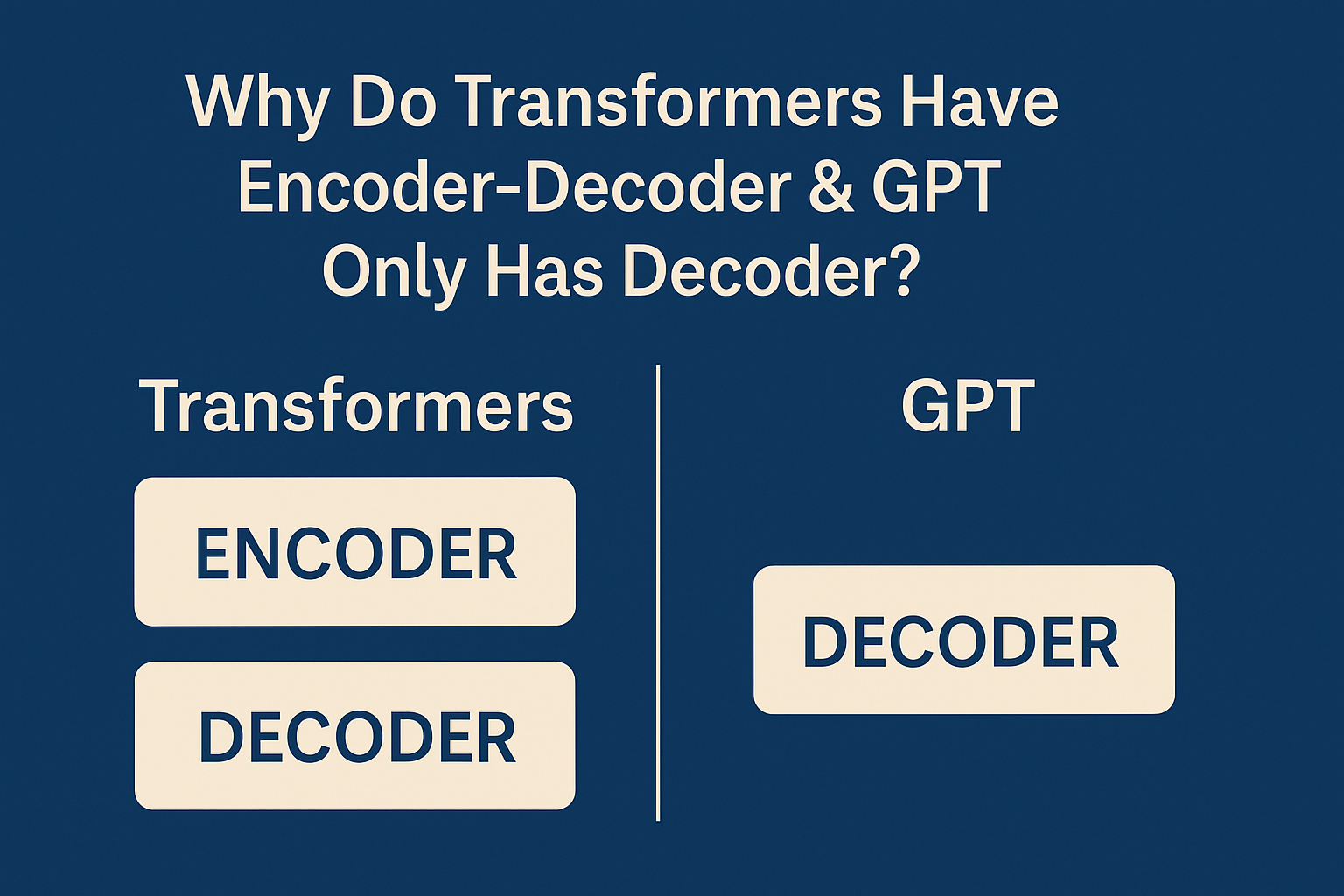

“GPT is decoder-only, while Transformers have both an encoder and a decoder.”

Sounds a bit confusing, right? Let’s break it down with real-life context so it actually makes sense.

The Story of Transformers: The Original Design

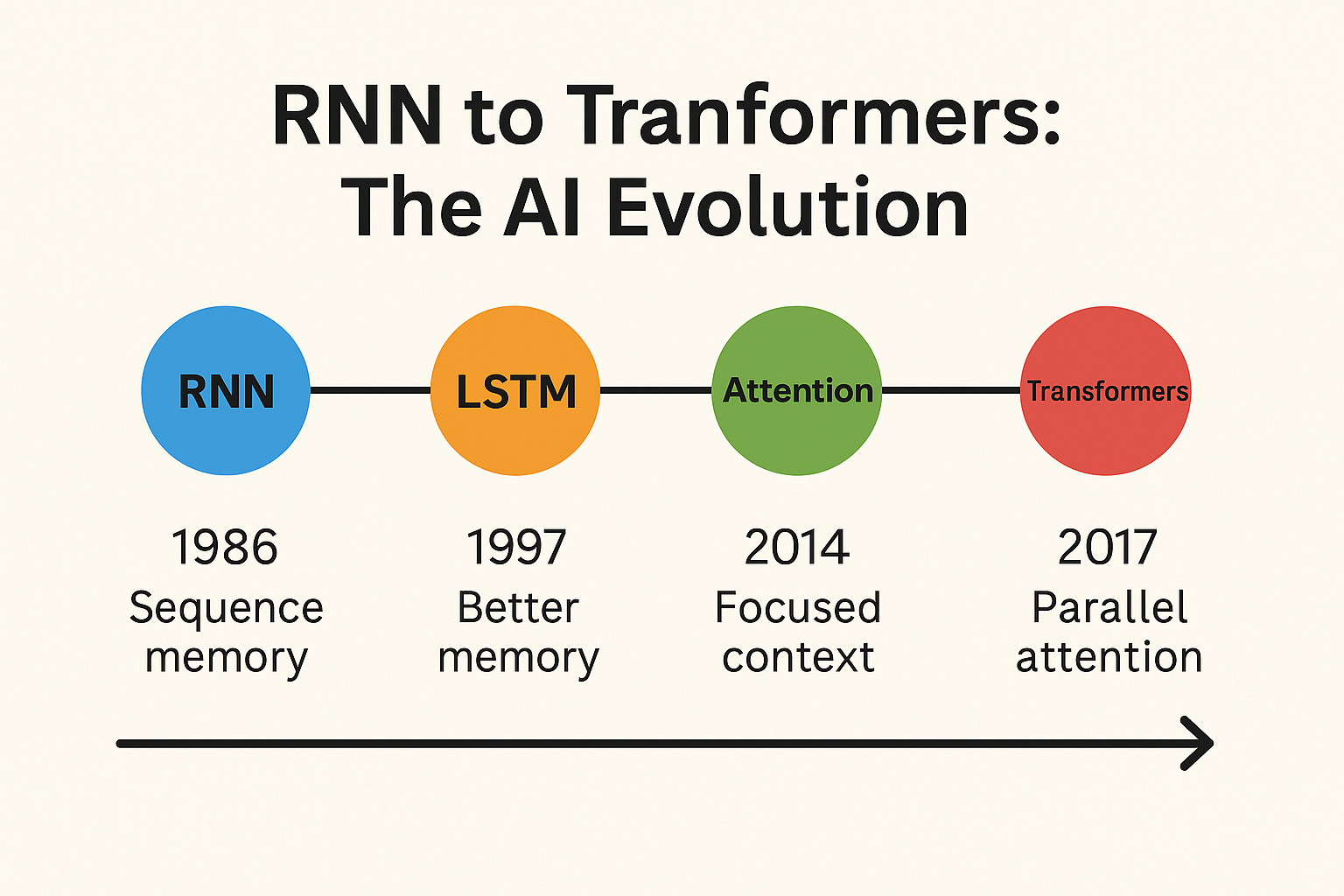

Back in 2017, when Vaswani and colleagues introduced the Transformer in the paper "Attention Is All You Need," they built a model with two core parts:

- Encoders: Read and understand the input (like a document, a question, or a sentence).

- Decoders: Take that understanding and generate an output (like a translation, a summary, or an answer).

Think of it like a translator:

- The encoder listens and understands your words.

- The decoder speaks in the target language.

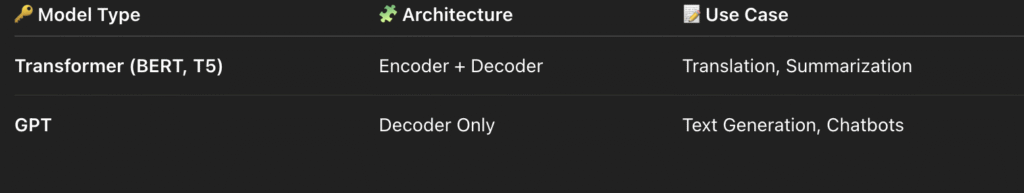

That’s why encoder-decoder models like T5 and BART work so well for tasks like translation, summarization, and question answering. In these tasks, the model needs to take in the whole input before it can generate a meaningful output.
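To make the "listen first, then speak" idea concrete, here is a minimal sketch of who is allowed to look at whom inside an encoder-decoder model. The function names are made up for illustration and are not from any library. Real models apply these patterns as masks on attention scores; this toy version only builds the True/False grids.

```python
def encoder_self_mask(n_src):
    """Encoder self-attention: every input position may look at every input position."""
    return [[True for _ in range(n_src)] for _ in range(n_src)]


def decoder_self_mask(n_tgt):
    """Decoder self-attention: output position i may look only at positions 0..i."""
    return [[j <= i for j in range(n_tgt)] for i in range(n_tgt)]


def cross_attention_mask(n_tgt, n_src):
    """Cross-attention: every output position may look at every encoder output."""
    return [[True for _ in range(n_src)] for _ in range(n_tgt)]


def show(mask):
    """Render a mask as rows of 1 (visible) and 0 (hidden)."""
    return "\n".join(" ".join("1" if v else "0" for v in row) for row in mask)


if __name__ == "__main__":
    # Lower-triangular pattern: each output token sees itself and earlier tokens only.
    print(show(decoder_self_mask(3)))
    # 1 0 0
    # 1 1 0
    # 1 1 1
```

The encoder grid is all ones (the listener hears the whole sentence), while the decoder's own grid is lower-triangular (the speaker cannot peek at words it has not said yet). Cross-attention is the bridge that lets the decoder consult everything the encoder produced.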

Then Comes GPT: The Storyteller Model

GPT takes a different approach.

- It’s not trying to translate or summarize someone else’s words.

- It’s trying to generate text, continue conversations, or complete sentences—kind of like an AI storyteller.

So, GPT skips the encoder and uses only the decoder. There is no separate input sequence to encode: your prompt and the generated text form one sequence, and the model predicts each next token from everything that came before it.
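The "builds on what came before" loop can be sketched in a few lines. This is a toy, not how GPT is implemented: `NEXT_TOKEN` is a hypothetical lookup table standing in for the model, and it only looks at the last token, whereas a real decoder conditions on the whole context. The point is the shape of the loop, where each new token is appended to the same sequence that holds the prompt.

```python
# Hypothetical stand-in for a trained model: last token -> most likely next token.
NEXT_TOKEN = {"once": "upon", "upon": "a", "a": "time", "time": "<eos>"}


def next_token(context):
    """Toy 'forward pass': sees only tokens already in the context, never future ones."""
    return NEXT_TOKEN.get(context[-1], "<eos>")


def generate(prompt, max_new_tokens=10):
    """Greedy autoregressive generation: predict, append, repeat."""
    tokens = list(prompt)  # prompt and output share one sequence
    for _ in range(max_new_tokens):
        tok = next_token(tokens)
        if tok == "<eos>":  # stop when the toy model signals end of sequence
            break
        tokens.append(tok)
    return tokens


if __name__ == "__main__":
    print(generate(["once"]))  # ['once', 'upon', 'a', 'time']
```

Notice there is no separate "encode the input" step anywhere. The prompt is simply the start of the sequence the decoder keeps extending.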

Let’s Make It Super Simple

Real-World Analogy

- Encoder-Decoder (T5, BART): Like a translator. You hear the whole sentence, process it, and then respond.

- Decoder-Only (GPT): Like a storyteller—you get a prompt, and you keep adding new ideas as you go along.

Why Does It Matter?

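Decoder-only models like GPT are a simple fit for open-ended generation: there is a single stack and no separate encoding pass, so the prompt and the continuation are handled the same way.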

Encoder-decoder models are a natural fit when the output depends on a fully read input, as in translation or summarization, because the encoder can look at the whole input at once.

It’s all about the task at hand—one size doesn’t fit all!

What do you think about this design difference? Have you used both models in your projects? Let’s chat in the comments! 🚀 And hey, if you want to dive deeper into AI concepts, check out my blog:
